During my recent visit to Warwick University I had the pleasure of working with Maxim Sviridenko. I knew many of his papers, since we both work on problems related to TSP, but I had not met him before. One thing I learned from my visit is that Maxim is a really nice guy; another is that he is really fond of scheduling problems. It is no surprise, then, that we started working on a problem that sits right in the intersection of scheduling and TSP-related problems, namely no-wait flow shop scheduling (NWFS). Not many results were known for this problem, and in particular there were no hardness of approximation results. However, a PTAS was known for a constant number of machines, and the general version was known to be a special case of ATSP. Since we only know an O(log n / log log n)-approximation algorithm for the latter, NWFS seemed to be a very attractive target, a possible first step towards obtaining better results for ATSP. It is for this reason that NWFS was mentioned in the open problem list of Shmoys and Williamson in their book on approximation algorithms.

As you have no doubt already guessed, either from the title of this post or from the past tense in one of the previous sentences, it did not exactly work as planned. However, we got some really nice results on the way. It turned out that not only is NWFS APX-hard, but it is also ATSP-hard. The latter means that a c-approximation for NWFS (where c is possibly a function of the number of jobs n) implies a c(1+ε)-approximation for ATSP for any ε>0. Given the existence of a PTAS for a constant number of machines, this is a rather surprising result. I will try to present the core idea here, which I believe is quite elegant, without engaging in too many technical details.

No-wait flow shop

In the no-wait flow shop problem, you are given a set of n jobs J_1,...,J_n and m machines M_1,...,M_m. Each job is a sequence of m operations, one for each machine, with processing time t_ij for the i-th job on the j-th machine. As usual, you are supposed to schedule all the jobs and minimize the makespan of the schedule. The unique features of this problem are the following:

- Operations of a single job have to be processed in a fixed order: first the operation on the first machine, then the one on the second machine, and so on.

- Each machine has to process the jobs in the same order (so-called permutation scheduling). It is useful to think about machines as lined up in a sequence, and the jobs arriving at one end and leaving at the other, with no overtaking.

- Finally, once a job is started it has to go through all machines without pausing (hence 'no-wait' in the problem name).

Because of the no-wait constraint, this problem can behave in a very unexpected manner. For example, speeding up one of the machines can increase the optimum makespan! (See Spieksma and Woeginger, "The no-wait flow-shop paradox".)

From NWFS to ATSP

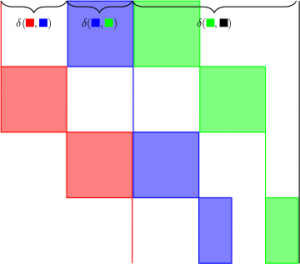

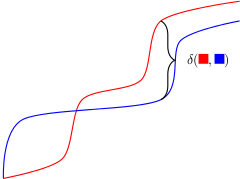

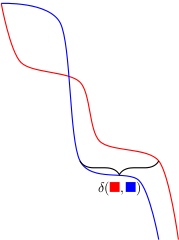

There are many distance functions that can be defined on no-wait flow shop jobs. One possibility is to define δ(i,j) as the minimum time we need to wait to start J_j after starting J_i. This is illustrated in the figure below.

The set of all jobs with the distance function δ defines a semi-metric (almost: δ(i,i) ≠ 0, but that is easy to fix). Schedules correspond to paths in this semi-metric. The makespan of a schedule is equal to the length of the corresponding path plus the total length of the last job. To handle the last job we do what the picture suggests, i.e. add a dummy job consisting of all zeros and then reduce to ATSP (and not ATSP path!).
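To make the reduction concrete, here is a minimal Python sketch of the start-to-start distance and the two ways of computing the makespan. The function names and the representation of a job as a list of processing times are my own choices for illustration. The formula used for the distance (job b may reach machine k only after job a has left it) is just the definition above spelled out.

```python
from itertools import accumulate

def start_distance(a, b):
    """Minimum delay between starting job a and starting job b right after it.

    a and b are lists of processing times, one entry per machine.
    Job b reaches machine k at (delay + time b spent on machines before k),
    and this must not be earlier than the moment a leaves machine k.
    """
    a_leaves = list(accumulate(a))             # time a leaves machine k
    b_reaches = [0] + list(accumulate(b))[:-1] # offset at which b reaches machine k
    return max(x - y for x, y in zip(a_leaves, b_reaches))

def makespan(jobs, order):
    """Path length in the semi-metric plus the total length of the last job."""
    path = sum(start_distance(jobs[i], jobs[j]) for i, j in zip(order, order[1:]))
    return path + sum(jobs[order[-1]])

def tour_length(jobs, order):
    """Same quantity as a closed tour through an all-zero dummy job."""
    dummy = [0] * len(jobs[0])
    stops = [dummy] + [jobs[i] for i in order] + [dummy]
    return sum(start_distance(a, b) for a, b in zip(stops, stops[1:]))

jobs = [[2, 1], [1, 3]]          # two jobs, two machines
print(makespan(jobs, [0, 1]))    # 6
print(makespan(jobs, [1, 0]))    # 5
```

The distance from the dummy job to any job is 0, and the distance from any job to the dummy job is that job's total length, which is why the tour length and the makespan agree.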

From ATSP to NWFS

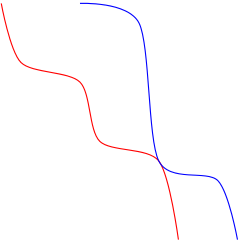

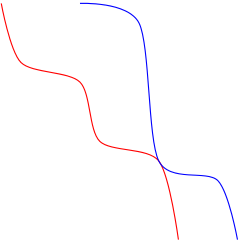

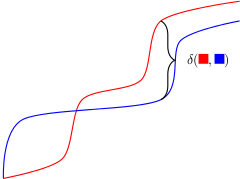

To see how one can go in the opposite direction, imagine a flow shop instance with a very large number of machines and very small processing times for each operation. Then the picture looks more like this.

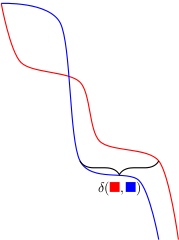

There is now a second way to (approximately) measure δ(i,j). Simply align the starting points of both jobs and look for a point where J_j is the most to the left of J_i.

If we now rotate the picture, we can see that the jobs really are just monotone functions, and for two such functions f, g we have δ(f,g) = max(f-g).

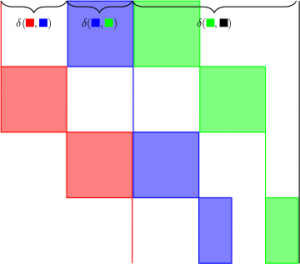

Now, here is the key fact: the function space described above is a universal space for all semi-metrics. More precisely: any n-point semi-metric embeds isometrically in R^n with distance defined as δ(x,y) = max_k (x_k - y_k). This result is a "directed" version of the universality of ℓ_∞ for metrics, and the proof is almost identical.
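Here is a small Python sketch of one embedding that achieves this. It is the directed analogue of the usual ℓ_∞ construction and is my assumption about what is meant, not something taken from the paper. Point i is mapped to row i of the distance matrix, and the distance between two vectors is the largest coordinate of their difference, mirroring max(f-g). The triangle inequality gives d(i,k) - d(j,k) ≤ d(i,j) for every k, and k = j attains equality because d(j,j) = 0. The function names and the example matrix are hypothetical.

```python
def embed(d):
    """Map point i of an n-point semi-metric to the vector (d(i,1), ..., d(i,n))."""
    return [list(row) for row in d]

def directed_max_distance(x, y):
    """Directed distance on R^n: the largest coordinate of x - y."""
    return max(xk - yk for xk, yk in zip(x, y))

# An asymmetric example: shortest-path distances on a directed 3-cycle
# with edge lengths 1, 1, 2. Zero diagonal, triangle inequality holds.
d = [
    [0, 1, 2],
    [3, 0, 1],
    [2, 3, 0],
]

points = embed(d)
recovered = [
    [directed_max_distance(points[i], points[j]) for j in range(len(d))]
    for i in range(len(d))
]
print(recovered == d)  # True
```

Note that the sketch relies on d(i,i) = 0, which is exactly the small fix mentioned earlier for the flow shop distance.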

To get actual hardness results one needs to control the number of machines and the embedding errors. One also needs to somehow handle the last term in the flow shop objective function that is not related to δ, i.e. the length of the last job. But the above considerations already show that no-wait flow shop distance functions are really no easier than general semi-metrics, which is the heart of the argument.

Over? Not quite...

Is this the end of the story? Most likely yes, if one is only interested in using NWFS to attack ATSP. However, the approximability of NWFS itself is still wide open. On one hand, we have a PTAS for a constant number of machines; on the other, we give an O(log m)-approximation algorithm in our paper (a variation on Frieze, Galbiati, Maffioli). What happens for moderate values of m, say m = log n, remains a mystery.