The Unique Label Cover problem (ULC for short) is the problem that the Unique Games Conjecture is about. The task is to label each vertex of a given graph with a letter from an alphabet, subject to constraints on the edges: each edge of the graph comes with a bijection that tells how a label on one endpoint should be translated into a label on the other endpoint. Clearly, it is easy to verify whether all constraints can be satisfied at once; the true goal is to violate as few edges as possible. The Unique Games Conjecture concerns the hardness of determining the fraction of edges that have to be violated; in this post I'm going to focus on instances where we need to violate only very few constraints. In other words, I'm going to study fixed-parameter algorithms for ULC parameterized by the number of violated constraints (denoted k).
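To see why checking full satisfiability is easy, here is a minimal sketch, not taken from the paper. It assumes vertices are numbered 0..n-1, labels are 0..s-1, and each constraint is a triple `(u, v, pi)` where `pi[a]` is the label forced on `v` when `u` has label `a`; the function name and this encoding are chosen here for illustration only. Fixing the label of one vertex determines the labels of its whole connected component, so it suffices to try all s labels on one vertex per component and propagate.

```python
from collections import deque


def satisfiable_labeling(n, s, constraints):
    """Return a labeling that satisfies every constraint, or None if none exists.

    constraints: list of (u, v, pi); pi is a length-s sequence encoding a
    bijection, meaning label a on u forces label pi[a] on v.
    (Hypothetical encoding, chosen for this sketch.)
    """
    # Store each bijection in both directions so labels can be propagated either way.
    adj = [[] for _ in range(n)]
    for u, v, pi in constraints:
        inv = [0] * s
        for a, b in enumerate(pi):
            inv[b] = a
        adj[u].append((v, tuple(pi)))
        adj[v].append((u, tuple(inv)))

    labeling = [None] * n
    for root in range(n):
        if labeling[root] is not None:
            continue
        # The label of the root determines the labels of its whole component.
        for guess in range(s):
            trial = {root: guess}
            queue = deque([root])
            ok = True
            while queue and ok:
                x = queue.popleft()
                for y, pi in adj[x]:
                    want = pi[trial[x]]
                    if y not in trial:
                        trial[y] = want
                        queue.append(y)
                    elif trial[y] != want:
                        ok = False
                        break
            if ok:
                for x, lab in trial.items():
                    labeling[x] = lab
                break
        else:
            # No label of the root extends to the component.
            return None
    return labeling
```

For example, a triangle over a two-letter alphabet with two identity constraints and one swap has no satisfying labeling, so at least one constraint has to be violated there.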

The main claim of our FOCS'12 paper is that the ULC problem can be solved in O(s^{O(k^2)} n^{O(1)}) time for an n-vertex graph and an alphabet of size s. This means that ULC is polynomial-time solvable for a constant-size alphabet and k=O(sqrt(log(n))), improving on the obvious k=O(1) algorithm. The main technique used is very simple: if you don't know what to do, randomly contract (equivalently, make undeletable) each edge independently with probability 0.5 - maybe something interesting will be highlighted in the graph with sufficiently high probability.
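The random contraction step itself can be sketched in a few lines. The following is an illustrative sketch under assumptions made here (vertices numbered 0..n-1, edges given as pairs, a union-find structure to represent contracted super-vertices); the function name `random_contraction` and its parameters are hypothetical, not from the paper.

```python
import random


def random_contraction(n, edges, p=0.5, rng=None):
    """Contract each edge independently with probability p.

    Returns (rep, kept): rep[v] is the representative of the super-vertex that
    v was contracted into, and kept lists the indices of the edges that were
    not contracted (and hence may still be deleted).
    """
    rng = rng or random.Random()
    parent = list(range(n))

    def find(x):
        # Union-find lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    kept = []
    for i, (u, v) in enumerate(edges):
        if rng.random() < p:
            parent[find(u)] = find(v)  # contract: merge the two endpoints
        else:
            kept.append(i)
    rep = [find(v) for v in range(n)]
    return rep, kept
```

Note that a kept edge may end up with both endpoints in the same super-vertex; how such loops are handled depends on the problem and is not covered by this sketch.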

What does "sufficiently high probability" mean? As we are aiming at a running time that is FPT in s and k, a probability of the form 1/f(s,k) is fine for us.

So, what nice things can happen after such a step? First, we can observe that this is a very easy way to find good separators to recurse on in the Kawarabayashi-Thorup way. Without going into technical details, if we find a small (of size f(s,k)) cut that separates two large (of size at least g(s,k)) connected subgraphs, we can recurse on one of the sides. With probability 2^{-f(s,k)-2g(s,k)} we won't contract any edge of the cut, while contracting a large (of size g(s,k)) connected subgraph on each side of the cut. After such an operation, if two vertices of "weight" at least g(s,k) can be separated with a cut of size f(s,k), we've found a good separation.
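One way to arrive at the probability bound above, assuming we fix one connected subgraph on g(s,k) vertices on each side and look only at a spanning tree of each (which has g(s,k)-1 edges): the cut edges and the two spanning trees are pairwise edge-disjoint, so the three events are independent, and

$$
\Pr[\text{success}] \;\ge\; \underbrace{2^{-f(s,k)}}_{\text{no cut edge contracted}} \cdot \underbrace{\left(2^{-(g(s,k)-1)}\right)^{2}}_{\text{both spanning trees contracted}} \;\ge\; 2^{-f(s,k)-2g(s,k)} .
$$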

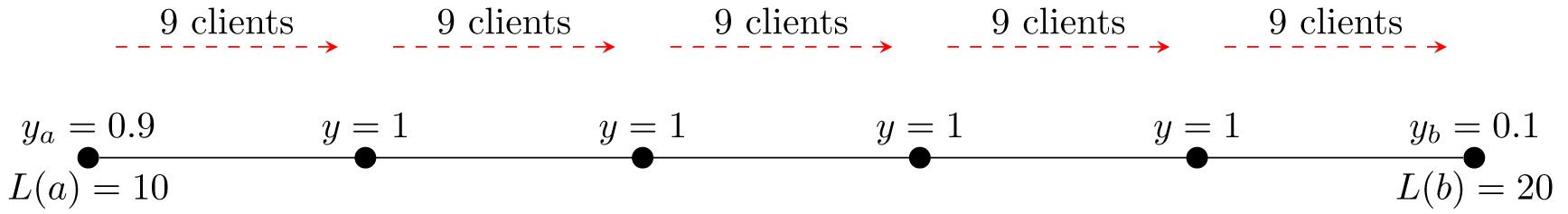

This step needs to augment the problem with border terminals and pay attention to a few details, but the overall message is: from now on we can assume that each small (in the ULC case it suffices to say here "of size at most f(s,k)=2k") separator leaves one side of small size (in the ULC case of size roughly g(s,k)=s^k).

Let X be a solution we're looking for. We know that in G-X there is one huge connected component, and at most k (as X is of size at most k) small connected components. What do we do now? Apply the trick again! With sufficiently high probability:

- each edge of X is not contracted;

- each small connected component is contracted onto one vertex;

- for each edge e in X and each endpoint v of e that is contained in the large connected component of G-X, there are at least g(s,k) vertices contracted onto v.

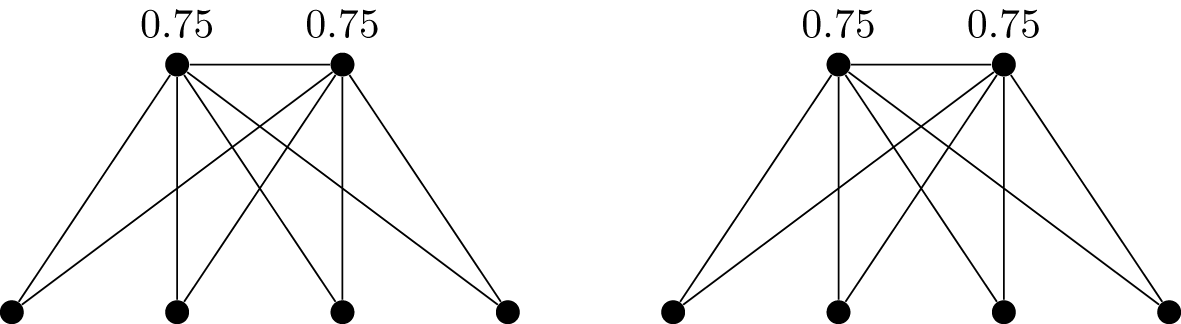

The core observation now is as follows: assume the above, and let v and w be two heavy vertices, that is, at least g(s,k) other vertices have been contracted onto each of v and w. Since we cannot recurse, there are at least 2k+1 edge-disjoint paths between v and w; each such path gives exactly one way to translate a label of v into a label of w. With a budget of k violated constraints, we can cut at most k such paths; thus, for each label of v, there is at most one reasonable label of w - the one that is yielded by a majority of the paths. Thus, there are only s ways to label all heavy vertices. The rest of the algorithm is just a matter of a few small observations which we won't describe in detail here.
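The majority argument can be made concrete with a small sketch. It assumes, for illustration only, that each of the edge-disjoint paths from v to w is given as a list of bijections (tuples over labels 0..s-1) in the order they are traversed; the names `compose_along_path` and `forced_labels` are hypothetical. With m >= 2k+1 paths and at most k of them cut, at least m-k paths survive and must all agree, so a label of w is reasonable only if at least m-k paths yield it.

```python
from collections import Counter


def compose_along_path(path_perms):
    """Compose the bijections met along a path from v to w, in traversal order."""
    s = len(path_perms[0])
    result = tuple(range(s))
    for pi in path_perms:
        # result[a] is the label reached so far when starting from label a at v.
        result = tuple(pi[b] for b in result)
    return result


def forced_labels(paths, k):
    """For each label a of v, return the only reasonable label of w, or None.

    paths: edge-disjoint v-w paths, each a non-empty list of bijections.
    Requires at least 2k+1 paths, as in the argument above.
    """
    m = len(paths)
    if m < 2 * k + 1:
        raise ValueError("need at least 2k+1 edge-disjoint paths")
    translations = [compose_along_path(p) for p in paths]
    s = len(translations[0])
    forced = []
    for a in range(s):
        label, count = Counter(t[a] for t in translations).most_common(1)[0]
        # At least m-k paths survive any solution, and they all have to agree.
        forced.append(label if count >= m - k else None)
    return forced
```

A `None` entry means that no label of w is consistent with that label of v within the budget, so that label of v can be discarded.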

Summing up, we found the above trick interesting, with one property that is not so common in the fixed-parameter tractability world: it is capable of handling weighted versions of the problem. It is not hard to generalize the above idea to find a minimum-cost set of violated constraints of size at most k. Naturally, there are a few technical devils hidden in this approach - they were sufficient to blow up the paper to 58 pages on arXiv - but still, it also enabled us to improve the k-Way Cut algorithm of Kawarabayashi and Thorup, as well as to give an alternative FPT algorithm for Multiway Cut-Uncut.

We conclude with a summary of the properties that are necessary to apply this approach - in case you would like to use it for your favourite problem.

- First, the problem needs to be some sort of graph deletion problem, with a possibility to bypass (or make undeletable) vertices or edges that are not to be deleted.

- To make a recursive step, we need that there is only a limited number of ways a solution can behave on a separator. In our algorithm we've used the fact that, in a recursive step, we only need to take care of the labeling of the separator (s^p choices for a separator of p vertices). This is closely related to the notion of finite integer index.

- Finally, we need to make use of the knowledge that in the final step, the graph has very good connectivity - in the sense that a small separator can remove only a small part of the graph. In the ULC case we've observed that parts with pairwise large (of size 2k+1) flow between them have a very limited number of ways they can be labeled. This part is problem-specific, and, from our experience, is the hardest part of the algorithm.

Let's take the vertex k-way cut problem, which is known to be W[1]-hard, as an example and see what breaks in the above approach. In this problem we're given a graph and integers k and p, and we're to delete p vertices to obtain at least k connected components (we parameterize by both k and p). It is not hard to see that the recursion works perfectly well: we only need to remember how the border vertices split into components, and how many connected components have already been created. However, the last step is hard; if you look at the hardness proof, the constructed instance already has strong connectivity - you can remove only isolated vertices by small separators - but it is still capable of encoding a clique instance.

The reverse situation happens in the Bisection problem, where we're to delete k edges (k is the parameter) to obtain two halves of n/2 vertices each. Here the final step seems easy, and boils down to a variant of the knapsack problem. However, in the recursive step we need to remember the balance between the parts of the graph - which gives a number of choices linear in n - and the approach breaks down. To the best of our knowledge, fixed-parameter tractability of Bisection remains open.